Thanks to the greater accessibility of personal and enterprise drones, it's now easier than ever to integrate them into your business. Can the same be said of traditional methods for processing the aerial imagery those drones capture? As the rate of aerial data acquisition outpaces the rate of data processing, it's no surprise that many companies are flocking to artificial intelligence (AI) and deep learning for autonomous and extensible solutions to this problem.

These technologies are often provided by businesses as tools or services, but simply stating that a product is “AI-powered” is not enough. Companies looking to use these tools want to understand how they can leverage them to solve the unique problems they face.

This article lays out several use cases for AI in a civil engineering and land surveying context and provides some intuition on how this technology works behind the scenes.

There is a plethora of use cases for AI in processing aerial imagery, all of which center on a shared mission: making sense of the patterns found in the imagery we see.

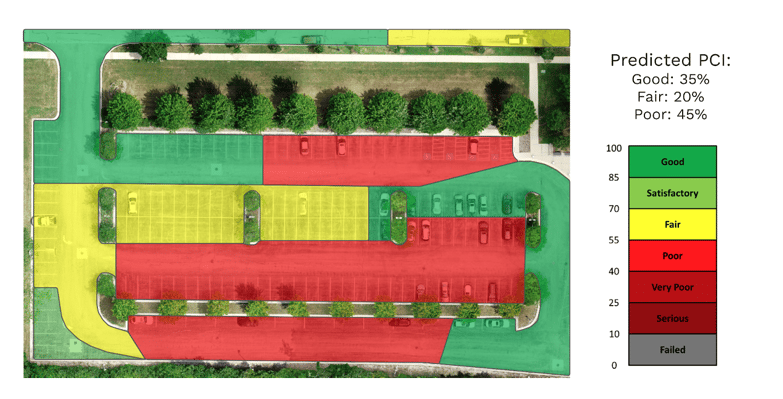

Pavement health analysis

For example, deep learning models and AI-powered software can be used to automate pavement health analysis. Instead of training a human to detect the various types of pavement deterioration (alligator cracking, patching, etc.), we can train a model on images of these defects so that it learns their differentiating features and patterns. The result is an autonomous and scalable tool that can not only classify sections of road on the Pavement Condition Index (PCI) scale but also localize the specific types of defects in the imagery itself. For municipalities and other local governments that must maintain and survey roadways, this type of autonomous solution greatly expedites pavement health inspection and cuts down on manual processing costs.

Object detection

Another use case for AI in aerial imagery is object detection, which provides information on the presence and location of certain objects on a site. In the image above, a deep learning model is trained to detect and locate all vehicles on the road and determine the occupancy of the parking lot. This type of model is useful for businesses that wish to monitor venue capacities and throughput. It could also be used by construction companies to monitor project development over time by locating various objects of interest (e.g., the installation of solar panels for renewable energy applications, or the presence of specific machinery at a construction site).
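To make the parking lot example concrete, here is a minimal Python sketch of how occupancy might be derived from a detector's output. Everything in it is an assumption for illustration: the `Box` and `Detection` types, the `"vehicle"` label, the confidence threshold, and the rule that a stall counts as occupied when the center of a vehicle's bounding box falls inside it. It does not describe how any particular product computes occupancy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned rectangle in pixel coordinates (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def center(self):
        return ((self.x_min + self.x_max) / 2, (self.y_min + self.y_max) / 2)

    def contains(self, point):
        x, y = point
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


@dataclass(frozen=True)
class Detection:
    """One object reported by a detector: what it is, how sure, and where."""
    label: str
    confidence: float
    box: Box


def parking_occupancy(detections, stalls, min_confidence=0.5):
    """Fraction of stalls that contain the center of a confident vehicle box."""
    vehicles = [
        d for d in detections
        if d.label == "vehicle" and d.confidence >= min_confidence
    ]
    occupied = sum(
        1 for stall in stalls
        if any(stall.contains(v.box.center()) for v in vehicles)
    )
    return occupied / len(stalls) if stalls else 0.0


# Made-up example: four stalls side by side and four detections.
stalls = [Box(0, 0, 10, 20), Box(10, 0, 20, 20),
          Box(20, 0, 30, 20), Box(30, 0, 40, 20)]
detections = [
    Detection("vehicle", 0.90, Box(1, 2, 9, 18)),
    Detection("vehicle", 0.80, Box(21, 3, 29, 17)),
    Detection("vehicle", 0.30, Box(31, 2, 39, 18)),  # below the threshold
    Detection("tree", 0.95, Box(11, 2, 19, 18)),     # not a vehicle
]

occupancy = parking_occupancy(detections, stalls)
print(f"Occupancy: {occupancy:.0%}")  # prints "Occupancy: 50%"
```

The confidence threshold is the main knob here: lowering it counts more uncertain detections as vehicles, which raises the reported occupancy at the risk of false positives.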

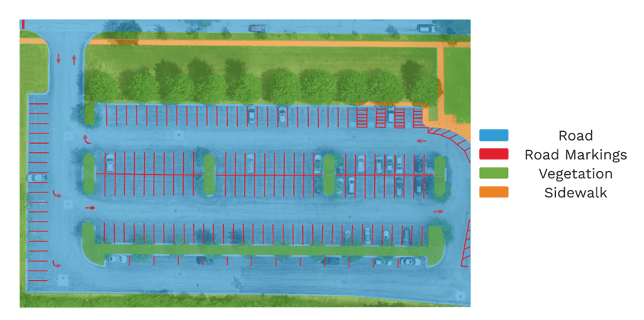

Image segmentation

The final example is image segmentation, which automatically classifies every region in the image as belonging to a predetermined class. This type of model is often used to describe the exact location and extents of particular objects and regions of interest. For example, civil engineering and land surveying firms require drafting that is accurate to within ±0.1 feet of the actual dimensions. Not only can this AI tool satisfy the accuracy constraints these projects demand, but it can do so at scale. This kind of technology has been used by many civil engineering and land surveying companies to expedite their manual drafting process. For them, utilizing this AI tool ensures that processing their aerial data is not the cause of delays in delivering projects.
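As a rough illustration of what a segmentation result looks like and how the ±0.1 foot tolerance might be checked, consider the Python sketch below. The class IDs and names, the tiny hand-written mask, and the ground sample distance (`gsd_ft`, the real-world width of one pixel in feet) are all hypothetical values chosen for the example, not figures from an actual project.

```python
from collections import Counter

# Hypothetical mapping from class ID to class name.
CLASS_NAMES = {0: "background", 1: "road", 2: "building"}


def class_areas_sqft(mask, gsd_ft):
    """Convert a per-pixel class mask into an area in square feet per class.

    mask   -- 2D list in which each entry is the class ID of one pixel
    gsd_ft -- ground sample distance: the width of one pixel in feet
    """
    counts = Counter(class_id for row in mask for class_id in row)
    pixel_area = gsd_ft ** 2
    return {CLASS_NAMES[c]: n * pixel_area for c, n in counts.items()}


def within_tolerance(drafted_ft, surveyed_ft, tolerance_ft=0.1):
    """True if a drafted dimension is within the tolerance of the surveyed one."""
    return abs(drafted_ft - surveyed_ft) <= tolerance_ft


# A 4x4 toy mask: every pixel carries exactly one class label.
mask = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 2, 2, 0],
    [0, 2, 2, 0],
]

areas = class_areas_sqft(mask, gsd_ft=0.05)
for name, area in sorted(areas.items()):
    print(f"{name}: {area:.4f} sq ft")

# Compare a drafted curb length against a made-up surveyed measurement.
print(within_tolerance(drafted_ft=24.93, surveyed_ft=25.0))
print(within_tolerance(drafted_ft=25.15, surveyed_ft=25.0))
```

Because every pixel is labeled, extents and areas fall out of simple counting, which is why the resolution of the imagery puts a floor on how precise the drafted dimensions can be.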

How AI uses aerial data to learn

While AI and deep learning technology have been transforming the landscape of processing aerial data, many questions remain about how these solutions work at their core. How does a model actually learn the differences between a road and a building? The complex mathematics behind this technology is beyond the scope of this article, but the following example highlights the key points you should know before adopting AI and deep learning in your business.

For deep learning, it's all about the data. The saying “garbage in, garbage out” is considered gospel in this community, and for good reason. An AI model does not inherently understand the difference between a road and the top of a building. It is the patterns it detects in the data it sees that ‘train’ it to differentiate them.

In plain English, deep learning models are trained to detect specific patterns in the imagery provided to them. Some of these same patterns are what experts and specialists use in their decision-making processes when manually processing aerial imagery. The major difference is that instead of training a person through higher education, classes, and certificates, we present these models with copious amounts of aerial imagery and they learn automatically.

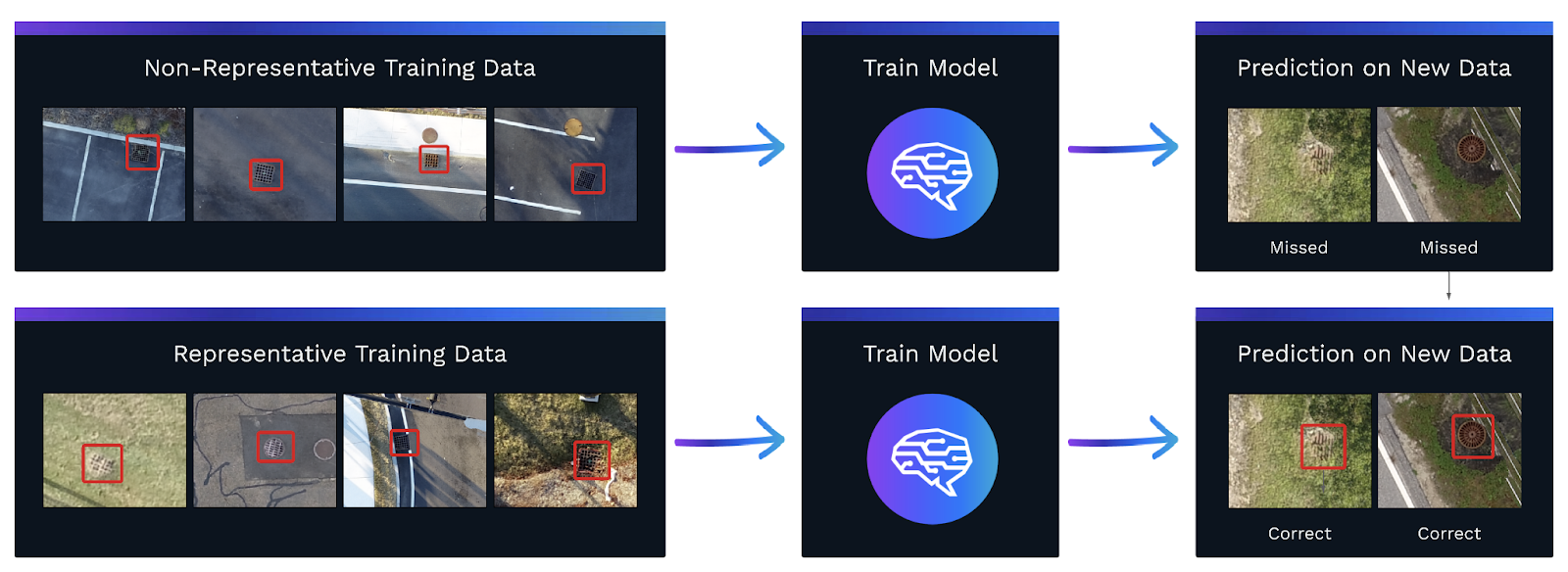

Does this mean that all we need to apply deep learning in our business is a lot of data? Not quite. Yes, we do need copious amounts of data, but that data must faithfully represent the patterns we are trying to capture. To illustrate, consider the catch basins in the above diagram, which shows the workflow of training a model to detect catch basins in aerial imagery. On the left are two training data sets: the top set contains only images of catch basins on pavement; the bottom set contains catch basins in varied environments, in both circular and rectangular shapes, and with partial occlusions.

The representative training data includes examples of catch basins you might find on a normal project site, while the non-representative training data does not quite capture all the edge cases that may typically be found on project sites. Since the two new examples are of catch basins on grass, the model that was trained on a dataset that contained very little variance (the top one) missed both catch basins, while the model trained on a dataset containing higher variance (the bottom one) detected and localized both catch basins.
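One simple way to reason about representativeness is to tag each training image with the conditions it shows and then look for conditions that are never covered. The Python sketch below assumes such tags exist; the attribute names (`surface`, `shape`, `occluded`) and the sample records are hypothetical and only mirror the catch basin example above. A check like this cannot prove a dataset is representative, but it can flag obvious gaps before training starts.

```python
def coverage_gaps(samples, expected):
    """Return, per attribute, the expected values that no sample covers.

    samples  -- list of dicts, one per training image, with hypothetical tags
    expected -- dict mapping each attribute to the values seen on real sites
    """
    gaps = {}
    for attribute, values in expected.items():
        seen = {sample[attribute] for sample in samples}
        missing = sorted(set(values) - seen)
        if missing:
            gaps[attribute] = missing
    return gaps


# Conditions we expect to encounter on a normal project site (assumed).
expected = {
    "surface": ["pavement", "grass"],
    "shape": ["rectangular", "circular"],
    "occluded": [False, True],
}

# Non-representative set: every catch basin is rectangular, unoccluded,
# and on pavement.
narrow_set = [
    {"surface": "pavement", "shape": "rectangular", "occluded": False},
    {"surface": "pavement", "shape": "rectangular", "occluded": False},
]

# Representative set: varied surfaces and shapes, plus a partial occlusion.
varied_set = [
    {"surface": "pavement", "shape": "rectangular", "occluded": False},
    {"surface": "grass", "shape": "circular", "occluded": False},
    {"surface": "grass", "shape": "rectangular", "occluded": True},
]

print(coverage_gaps(narrow_set, expected))
print(coverage_gaps(varied_set, expected))
```

For the narrow set, the function reports grass, circular shapes, and occlusions as uncovered, which matches the scenario in which the model misses catch basins on grass.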

Making sense of deep learning and AI applications can quickly become overwhelming, but it becomes manageable when you partner with companies that have spent years developing software to make these applications simpler. Many of these companies have teams of engineers and software developers who manage the technical side of AI and deep learning so that they can provide AI-powered solutions for their clients.

No matter the specific use case, making sense of aerial data is AirWorks’ mission, and it is what we provide for our clients. We specialize in using image segmentation technology to make sense of the information contained in aerial imagery for civil engineering and land surveying companies. Not only does our product provide industry-leading accuracy at a competitive price, but the final drafted sites are also fed back into our deep learning models to iteratively improve our output. With every new site a client processes on our platform, our output inches closer to full autonomy.

To learn more, visit our website at https://airworks.io/.